-

Notifications

You must be signed in to change notification settings - Fork 14

Parallel Processing

Including the Important feature of this program is that, at all phases of the program, you can perform parallel processing. Many personal computers and workstations have two or four cores (that is, CPUs) that enable multiple threads to be executed simultaneously. Computers in the near future are expected to have significantly more cores. To take advantage of the hardware of today and tomorrow, you can parallelize your program to distribute work across multiple processors. In the past, parallelization required low-level manipulation of threads and locks.

What is Parallel Computing?

- Traditionally, software has been written for serial computation:

- To be run on a single computer having a single Central Processing Unit (CPU).

- A problem is broken into a discrete series of instructions.

- Instructions are executed one after another.

- Only one instruction may execute at any moment in time.

- In the simplest sense, parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

- To be run using multiple CPUs

- A problem is broken into discrete parts that can be solved concurrently

- Each part is further broken down to a series of instructions

- Instructions from each part execute simultaneously on different CPUs

-

The compute resources can include:

- A single computer with multiple processors.

- An arbitrary number of computers connected by a network.

- A combination of both.

-

The computational problem usually demonstrates characteristics such as the ability to be:

- Broken apart into discrete pieces of work that can be solved simultaneously.

- Execute multiple program instructions at any moment in time.

- Solved in less time with multiple compute resources than with a single compute resource.

Optimally, the speed-up from parallelization would be linear—doubling the number of processing elements should halve the runtime, and doubling it a second time should again halve the runtime. However, very few parallel algorithms achieve optimal speed-up. Most of them have a near-linear speed-up for small numbers of processing elements, which flattens out into a constant value for large numbers of processing elements.

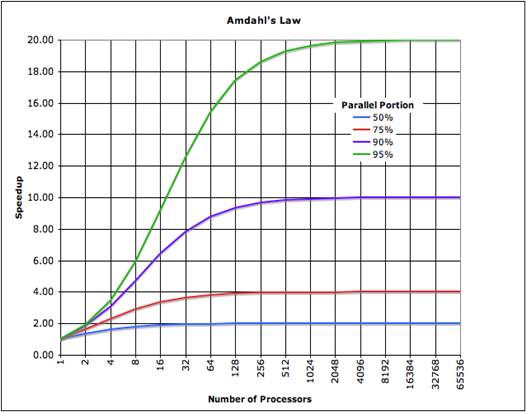

The potential speed-up of an algorithm on a parallel computing platform is given by Amdahl's law, originally formulated by Gene Amdahl in the 1960s.1 It states that a small portion of the program which cannot be parallelized will limit the overall speed-up available from parallelization. Any large mathematical or engineering problem will typically consist of several parallelizable parts and several non-parallelizable (sequential) parts. This relationship is given by the equation:

S = 1 / (1-P)

Where S is the speed-up of the program (as a factor of its original sequential runtime), and P is the fraction that is parallelizable. If the sequential portion of a program is 10% of the runtime, we can get no more than a 10× speed-up, regardless of how many processors are added. This puts an upper limit on the usefulness of adding more parallel execution units. "When a task cannot be partitioned because of sequential constraints, the application of more effort has no effect on the schedule. The bearing of a child takes nine months, no matter how many women are assigned."2

Gustafson's law is another law in computing, closely related to Amdahl's law. It can be formulated as:

S(P) = P - α*( P - 1 )

where P is the number of processors, S is the speed-up, and α the non-parallelizable part of the process.3 Amdahl's law assumes a fixed problem size and that the size of the sequential section is independent of the number of processors, whereas Gustafson's law does not make these assumptions.

A graphical representation of Amdahl's law. The speed-up of a program from parallelization is limited by how much of the program can be parallelized. For example, if 90% of the program can be parallelized, the theoretical maximum speed-up using parallel computing would be 10x no matter how many processors are used.

A graphical representation of Amdahl's law. The speed-up of a program from parallelization is limited by how much of the program can be parallelized. For example, if 90% of the program can be parallelized, the theoretical maximum speed-up using parallel computing would be 10x no matter how many processors are used.

To set or change priority or affinity of process for the program, click on Process Setting menu, to see the Set Priority and Set Affinity option.

Copyright ©2018 [Bezzad [email protected]) All Rights Reserved.

-

Welcome

-

Features

-

Using Menu Bar

- File

- Tools

- Process Setting