API monitoring & analytics made simple

Metrics, logs, and alerts for your serverless APIs — with just a few lines of code.

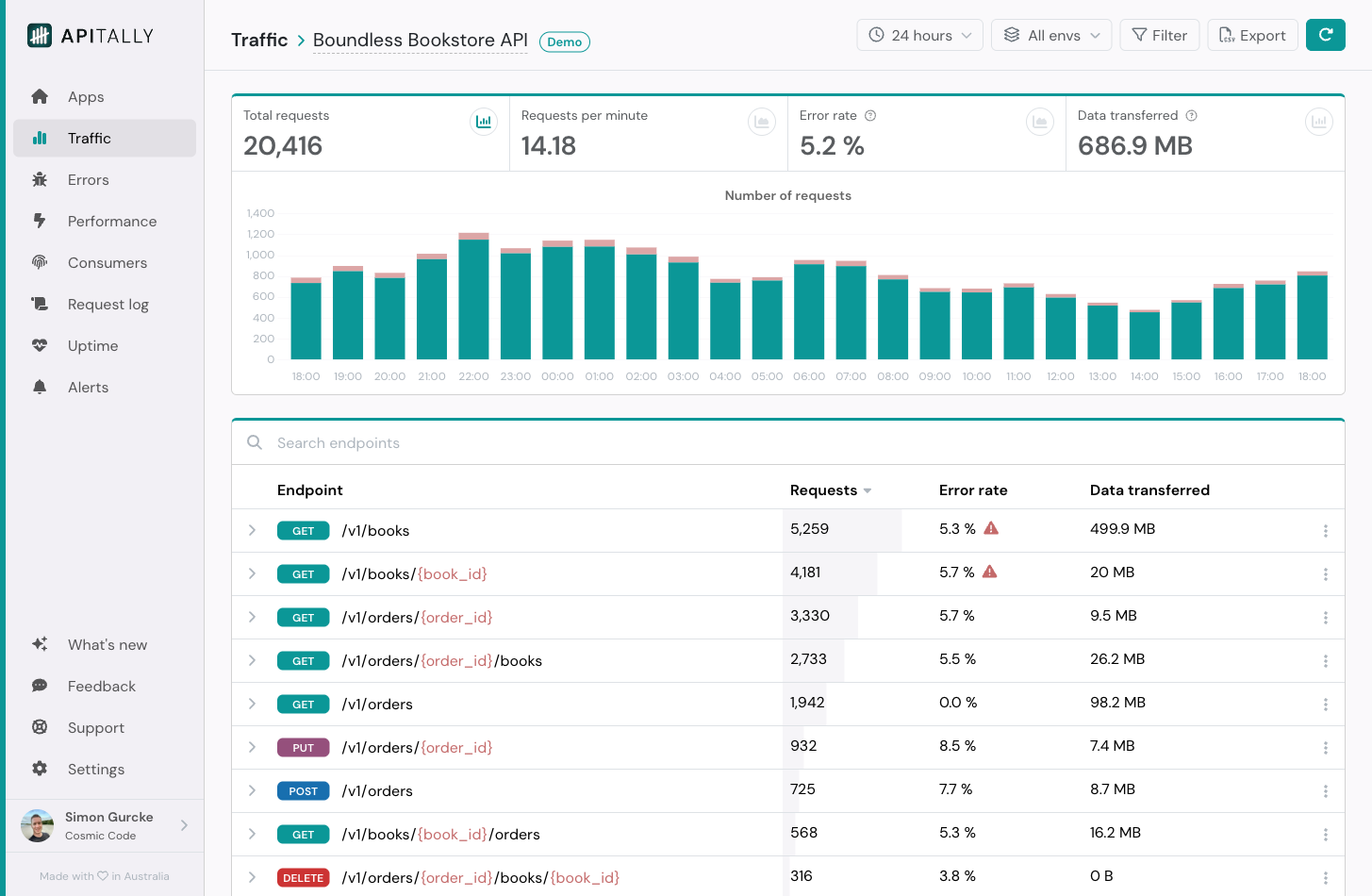

Apitally is a simple API monitoring and analytics tool that makes it easy to understand how your APIs are used and helps you troubleshoot API issues faster. Setup is easy and takes less than 5 minutes.

Learn more about Apitally on our 🌎 website or check out the 📚 documentation.

This SDK is for APIs running on Cloudflare Python Workers and relies on Logpush to send data to Apitally.

Track traffic, error, and performance metrics for your API as a whole, for each endpoint, and for individual API consumers, so you can make informed, data-driven engineering and product decisions.

Drill down from insights to individual API requests or use powerful search and filters to find specific requests. View correlated application logs for a complete picture of each request, making troubleshooting faster and easier.

Understand which validation rules in your endpoints cause client errors. Capture error details and stack traces for 500 error responses.

Get notified immediately if something isn't right using custom alerts and synthetic uptime checks. Alert notifications can be delivered via email, Slack and Microsoft Teams.

| Framework | Supported versions | Setup guide |
|---|---|---|
| FastAPI | >=0.116.1 | Link |

Apitally also supports many other web frameworks in Python, JavaScript, Go, .NET and Java via our other SDKs.

If you don't have an Apitally account yet, first sign up here.

Create an app in the Apitally dashboard and select FastAPI (Cloudflare Workers) as your framework. You'll see detailed setup instructions, which also include your client ID.

Log in to the Cloudflare dashboard and navigate to Analytics & Logs > Logpush. Create a Logpush job with the following settings:

| Setting | Value |
|---|---|
| Destination | HTTP destination |
| HTTP endpoint | https://hub.apitally.io/v2/{client-id}/{env}/logpush |
| Dataset | Workers trace events |
| If logs match... | Filtered logs: EventType equals fetch and ScriptName equals {your-worker-name} |
| Send the following fields... | General: Event, EventTimestampMs, Logs |

In the HTTP endpoint, replace {client-id} with your app's client ID and {env} with the environment (e.g. prod or dev). In the filter criteria, replace {your-worker-name} with the name of your Worker, as specified in your Wrangler config.
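The placeholder substitution and the filter criteria described above can be sketched in a few lines of Python. This is only an illustration, not part of the SDK: `build_logpush_endpoint` and `matches_filter` are hypothetical helper names, and the client ID and Worker name shown are made-up values.

```python
from urllib.parse import quote

# Base URL taken from the HTTP endpoint in the settings table above.
APITALLY_HUB = "https://hub.apitally.io/v2"


def build_logpush_endpoint(client_id: str, env: str) -> str:
    """Fill in the {client-id} and {env} placeholders of the HTTP endpoint."""
    if not client_id or not env:
        raise ValueError("client_id and env must be non-empty")
    # Percent-encode both parts so stray characters cannot break the URL path.
    return f"{APITALLY_HUB}/{quote(client_id, safe='')}/{quote(env, safe='')}/logpush"


def matches_filter(event: dict, worker_name: str) -> bool:
    """Mirror the Logpush filter: EventType equals fetch and ScriptName equals the Worker name."""
    return event.get("EventType") == "fetch" and event.get("ScriptName") == worker_name


# Hypothetical client ID, for illustration only.
print(build_logpush_endpoint("00000000-0000-0000-0000-000000000000", "prod"))
```

Paste the resulting URL into the HTTP endpoint field of the Logpush job. `matches_filter` only illustrates which trace events the filter lets through; Cloudflare applies the actual filter on its side.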

Enable Workers Logs and Logpush in your wrangler.toml configuration file:

    logpush = true

    [observability]
    enabled = true
    head_sampling_rate = 1

    [observability.logs]
    invocation_logs = true

Install the SDK:

    uv add apitally-serverless

Then add the Apitally middleware to your FastAPI application:

    from fastapi import FastAPI
    from apitally_serverless.fastapi import ApitallyMiddleware

    app = FastAPI()
    app.add_middleware(
        ApitallyMiddleware,
        log_request_headers=True,
        log_request_body=True,
        log_response_body=True,
    )

For further instructions, see our setup guide for FastAPI on Cloudflare Workers.

See the SDK reference for all available configuration options, including how to mask sensitive data, customize request logging, and more.

If you need help, please create a new discussion on GitHub or email us at support@apitally.io. We'll get back to you as soon as possible.

This library is licensed under the terms of the MIT license.